Intelligence, knowledge and control

Growing fears

We are just a couple of months into an AI frenzy that started with the release of ChatGPT, and already influential people and some insiders from the AI community are calling for a moratorium on training even larger models. Specifically, they are asking the AI community (the likes of Google, Microsoft, Meta, Baidu, OpenAI, Anthropic, Cohere.ai etc.) to

“… step back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities”.

Pause Giant AI Experiments: An Open Letter

The key issue here is fear of the unknown, triggered by emergent capabilities that seem to exceed our expectations every time a larger model is released.

So what makes intelligent machines so powerful that we have to be mindful, if not afraid, of them? If we intuit the answer from visual imagery (borrowed from The Matrix, Blade Runner and post-apocalyptic movies, no doubt), it seems obvious enough: people will lose their jobs and become pets, slaves, plugins or batteries for the dominant robot race. Looking at the problem on a more abstract and mechanistic level, however, it is far from clear that the growth of artificial intelligence is bound to lead us to desperation and ruin. It would therefore be useful to identify the boundary conditions that are likely to cause our descent into an apocalyptic abyss (before we hit them).

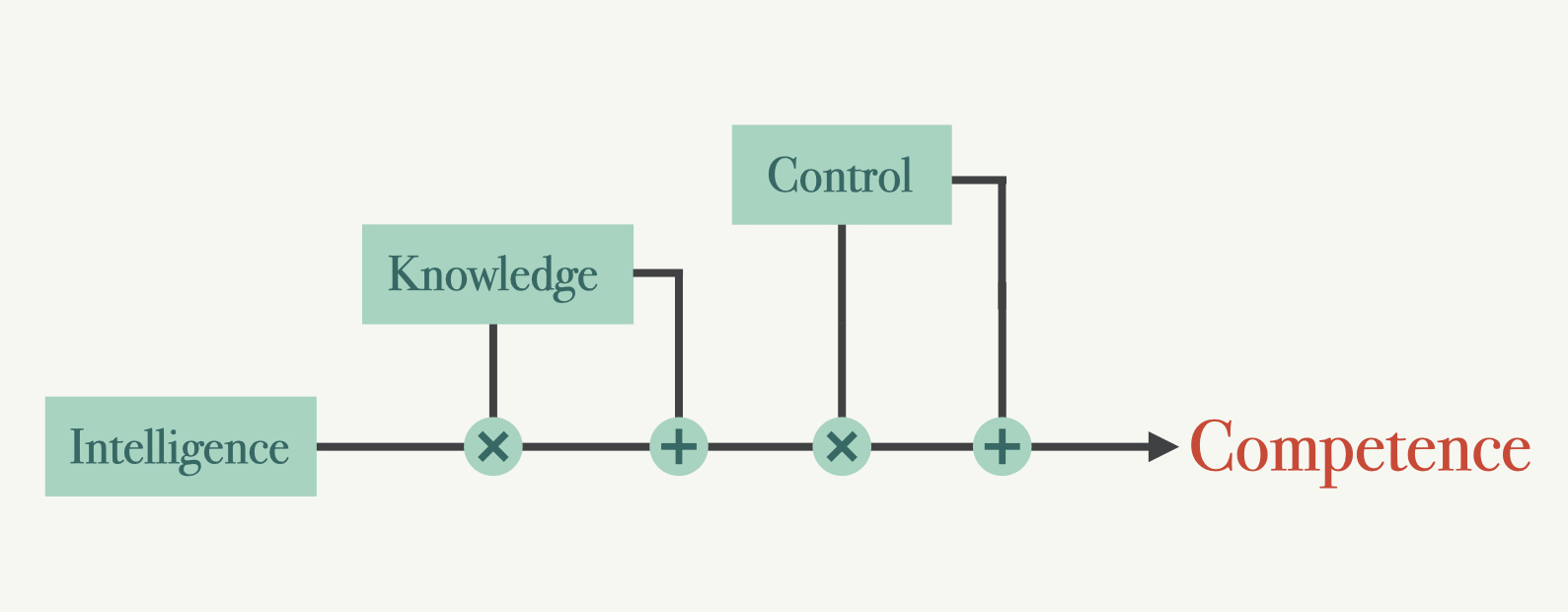

My goal here is to suggest that the endgame is decided by the interaction between intelligence, knowledge and control. All three factors matter for the outcome, and they come together in a particular hierarchy.

Intelligence, knowledge and control

I will define intelligence informally as an agent’s problem-solving ability. You can think of it as the ability to represent your current situation well enough to identify the optimal next action. For a more rigorous treatment of machine intelligence, check out Shane Legg’s utterly enjoyable PhD thesis.

The degree of intelligence exhibited by agent $A$ on task $X$ in environment $Z$ is highest when the agent performs the sequence of actions that is most likely to lead to the completion of task $X$. Task $X$ is nothing more than a pair of states $(s_0, s_{goal})$ that determine the initial situation and the end goal. The problem is solved when the agent reaches $s_{goal}$ after starting out from $s_0$. We could use the expression $I_A(X, Y \mid Z)$ to represent the idea that the intelligence exhibited by agent $A$ in environment $Z$ is a function of the task $X$ and task-relevant information $Y$ (knowledge). It does not matter whether $Y$ is obtained “on the spot”, is a collection of past experiences, or is a combination of both. In addition, we will assume that any gadgets (devices, tools etc.) the agent might use to reach the goal state also represent knowledge. This makes sense, because gadgets are just physical manifestations of the knowledge that engineers have distilled into them. Accordingly, if Archimedes could clone himself and time-travel into the future, where he obtains a light-sabre, the Archimedes from the future would possess more knowledge than his antique counterpart (at least as long as he knows how to use the light-sabre).

The number of actions available to the agent in each state leading up to $s_{goal}$ determines the degree of control the agent can exercise at each step towards the solution. An agent has perfect control when all actions that are required to solve $X$ (i.e. reach $s_{goal}$) are available to it.
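To make these definitions concrete, here is a minimal Python sketch under simplifying assumptions that go beyond the text: states and actions are plain strings, the environment $Z$ is a table of deterministic transitions, and the actions available to the agent in each state are given as a set. All names (`Task`, `reaches_goal`, `missing_control`, `has_perfect_control`) and the door example are hypothetical and only illustrate the notion of perfect control.

```python
from typing import Dict, List, Set, Tuple

State = str
Action = str
Task = Tuple[State, State]                       # X = (s_0, s_goal)
Transitions = Dict[Tuple[State, Action], State]  # assumed: deterministic environment Z
Available = Dict[State, Set[Action]]             # actions the agent can take in each state


def reaches_goal(task: Task, plan: List[Action], transitions: Transitions) -> bool:
    """Follow the plan from s_0 and check whether it ends in s_goal."""
    state, goal = task
    for action in plan:
        state = transitions[(state, action)]
    return state == goal


def missing_control(task: Task, plan: List[Action],
                    transitions: Transitions, available: Available) -> List[Tuple[State, Action]]:
    """List every (state, action) step of the plan that the agent cannot execute."""
    state, _goal = task
    missing = []
    for action in plan:
        if action not in available.get(state, set()):
            missing.append((state, action))
        state = transitions[(state, action)]  # where the step would lead if it were available
    return missing


def has_perfect_control(task: Task, plan: List[Action],
                        transitions: Transitions, available: Available) -> bool:
    """Perfect control: the plan solves X and every action it requires is available."""
    return reaches_goal(task, plan, transitions) and not missing_control(task, plan, transitions, available)


# Hypothetical example: walk to a door, then open it.
transitions = {("s0", "walk"): "s1", ("s1", "open"): "s_goal"}
task = ("s0", "s_goal")
plan = ["walk", "open"]
free = {"s0": {"walk"}, "s1": {"open"}}
locked_in = {"s0": {"walk"}, "s1": set()}  # the door cannot be opened by this agent

print(has_perfect_control(task, plan, transitions, free))   # True
print(missing_control(task, plan, transitions, locked_in))  # [('s1', 'open')]
```

The plan is the same in both cases; only the set of available actions changes, which is exactly the dimension the definition of control isolates.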

Finally, the agent with the highest general competence is the one that, on average, exhibits the highest performance when solving problems of varying difficulty across different environments. Competence is a function of intelligence, knowledge and control. High competence leads to good performance. Amen.

Thought experiments

Making Archimedes a guinea pig (sorry for that), we can now start to tease him with some experiments. Without a doubt, Archimedes was a very intelligent person. Based on his epochal accomplishments some 2000 years ago, it is safe to say that his conceptual powers were comparable to those of Gauss, Leibniz, Einstein and Curie, to name just a few. So why didn’t Archimedes produce a mathematical description of the normal distribution and a theory of relativity? Was he really that much dumber than the other guys and girls?

Of course not, he just lacked the knowledge. If task $X$ is the discovery of the theory of relativity, and the historical steps leading to it begin from a prehistoric $s_0$ and end with the goal state $s_{1000}$, then Archimedes stood at $s_{150}$, Leibniz at $s_{600}$, while Einstein started out from $s_{950}$ or so. Accordingly, for Archimedes to reach $s_{600}$ or $s_{950}$ in his lifetime would have been unthinkable.

Axioms of competence

This leads us to the first axiom of competence:

- Knowledge trumps intelligence

If Archimedes had had the necessary knowledge, he might well have figured out the bell curve, found a proof of Fermat’s Last Theorem (had it existed at the time) and made a stab at the theory of relativity. But he did not, because he lacked the shoulders of giants to stand on and take the leap. Using the wonderful gadgets that exist today, pretty much anyone can achieve feats that would have signalled genius 1000 years ago, without boasting exceptional intelligence. This is possible because these gadgets are physical representations of the knowledge that makes them possible. In other words, solving a problem by following instructions (knowledge) requires much less intelligence than intuiting the solution on your own. That is how knowledge trumps intelligence.

It may well be that Archimedes was among the most intelligent and knowledgeable people of his time. But was he the most powerful? The one with the most control over world affairs? Probably not. Most likely he was nowhere near the pinnacle of power, because he was a scholar and did not command armies or tax revenues from millions of people.

This leads us to the second axiom of competence:

- Control trumps knowledge

No matter how intelligent and knowledgeable you are, if you are locked in a basement with no way to get out or incapacitated by other means, you will not make a dent in this universe, because you lack the control to do it. Intelligence is useful for generating new knowledge, and knowledge is useful for obtaining more control. However, if you are simply handed a lot of control out of the box (e.g. by inheriting the leadership from an autocratic ruler), you are bound to have an outsized effect on this world without exhibiting either exceptional intelligence or a deep understanding of the world’s many regularities. It all goes to show that intelligence, knowledge and control are independent dimensions: an agent operating in the real world can have any combination of them.
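Both axioms can be illustrated with a toy planner. This is a minimal sketch under simplifying assumptions that go beyond the argument above: knowledge is a table of known state transitions, control is the set of actions the agent can actually take in each state, and intelligence is reduced to a search-depth budget. The function `plan` and the example states are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

State = str
Action = str


def plan(s0: State, goal: State,
         knowledge: Dict[Tuple[State, Action], State],  # K: transitions the agent knows about
         control: Dict[State, Set[Action]],              # C: actions the agent can actually take
         intelligence: int,                              # I: how many steps ahead it can search
         ) -> Optional[List[Action]]:
    """Depth-limited breadth-first search from s0 to goal.

    Only known transitions are considered (intelligence is gated by knowledge),
    and only if their action is available (knowledge is gated by control).
    Returns the shortest action sequence found, or None.
    """
    frontier = deque([(s0, [])])
    visited = {s0}
    while frontier:
        state, path = frontier.popleft()
        if state == goal:
            return path
        if len(path) >= intelligence:
            continue  # cannot look any further ahead
        for (src, action), dst in knowledge.items():
            if src == state and action in control.get(state, set()) and dst not in visited:
                visited.add(dst)
                frontier.append((dst, path + [action]))
    return None


# Hypothetical chain of discoveries s0 -> s1 -> s2 -> s3.
world = {("s0", "a1"): "s1", ("s1", "a2"): "s2", ("s2", "a3"): "s3"}
full_control = {"s0": {"a1"}, "s1": {"a2"}, "s2": {"a3"}}

print(plan("s0", "s3", world, full_control, intelligence=3))      # ['a1', 'a2', 'a3']

# Knowledge trumps intelligence: with one link missing, no search budget helps.
partial = {k: v for k, v in world.items() if k != ("s1", "a2")}
print(plan("s0", "s3", partial, full_control, intelligence=100))  # None

# An agent that starts further along the chain needs far less look-ahead.
print(plan("s2", "s3", world, full_control, intelligence=1))      # ['a3']

# Control trumps knowledge: full knowledge, but the last action is unavailable.
no_a3 = {"s0": {"a1"}, "s1": {"a2"}, "s2": set()}
print(plan("s0", "s3", world, no_a3, intelligence=100))           # None
```

Each of the three arguments can be varied on its own, mirroring the claim that the dimensions are independent. In this toy, competence collapses to "found a plan or not", which is far cruder than average performance across tasks and environments.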

How does this apply to machines?

Machines are also agents, which makes them subject to axioms 1 and 2. You can have a very intelligent machine (capable of learning all sorts of relationships), but it will not come up with a design for the light-sabre without the required knowledge. And knowledge is not obtained for free. By reading Wikipedia or consuming the whole internet, the agent will at best obtain the publicly available knowledge as of today. That is a tiny amount compared to the potentially infinite knowledge about the universe, and certainly not enough for the light-sabre at this point. Accordingly, I am only marginally worried about the intelligence explosion advocated by Ray Kurzweil and Nick Bostrom. Superb intelligence is just not sufficient to reach arbitrary goals, as we saw in Archimedes’ case.

The only way to solve the problem at hand is to generate lots of new knowledge that will advance us towards the goal state. This takes careful planning and the execution of many complicated experiments. The truth about the workings of the physical world is not simply handed out to those who are exceptionally intelligent: very special circumstances need to be staged in order to decide whether a particular hypothesis is true or not. You cannot circumvent this in a digital mind, because without such experiments the search over the many chains of hypotheses explodes exponentially and will put the lights out even in the brightest digital mind. And it will be a really slow explosion when a set of experiments has to be performed in the physical world every time a new hypothesis is generated. My personal opinion is that if an intelligence explosion happens in a machine, we will not even notice until we explicitly test for it. I suspect Jeff Hawkins and Andrew Ng might subscribe to a similar view.
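A back-of-the-envelope calculation shows why such an explosion would be slow. The numbers are illustrative assumptions, not estimates: each hypothesis opens 10 follow-up hypotheses, chains are 6 steps deep, every node of the hypothesis tree needs its own experiment, and a physical experiment takes one day versus one millisecond for a purely digital check. The helper names are hypothetical.

```python
def hypotheses_to_test(branching: int, depth: int) -> int:
    """Nodes in a full hypothesis tree: b + b^2 + ... + b^depth."""
    return sum(branching ** k for k in range(1, depth + 1))


def years(n_experiments: int, seconds_each: float) -> float:
    """Wall-clock time in years if the experiments run one after another."""
    return n_experiments * seconds_each / (365.25 * 24 * 3600)


DAY = 24 * 3600.0
n = hypotheses_to_test(branching=10, depth=6)
print(n)                                                      # 1111110
print(f"physical, one day each: {years(n, DAY):.0f} years")  # roughly three thousand years
print(f"digital, 1 ms each: {n * 0.001 / 60:.1f} minutes")   # under twenty minutes
```

Running experiments in parallel divides the wall-clock time by the number of labs, but it does not remove the exponential growth with depth.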

The competence circuit

Based on axioms 1 and 2, we can make the following statement: the competence of an agent is a function of its intelligence, knowledge and control, where the effect of intelligence is gated by knowledge and the effect of knowledge is gated by control. The following block diagram represents the same idea in more detail:

When we talk about AI becoming potentially dangerous, we are really worried about AI having control over the infrastructure that makes the world tick. Intelligence and knowledge play only supporting roles here, as you can have a lot of control without them. Just imagine a random number generator attached to a trigger that will launch a nuke, or a baby tapping away on an array of buttons that switch large electricity distribution systems on and off. What we should be worried about, then, are our own actions that increase the degree of control AI has over critical infrastructure.

The biggest step towards this nightmare is definitely OpenAI’s launch of ChatGPT plugins, which grant ChatGPT access to third-party API-driven services. You can imagine the potential for trouble in any system (more or less intelligent) that is granted control over critical financial and energy infrastructure. The responsibility here lies not so much with the AI system as with the party that granted it access to the infrastructure. Lack of basic governance around the degree of control intelligent systems are allowed to exercise over life-supporting systems is what will make them dangerous. Intelligence itself has little to do with it.

Conclusion

Summing it all up, should we be worried? Sure, if we leave governance to people with a startup mindset, for whom governance is among the lowest priorities. But we should not worry too much about the ever-higher intelligence of machines. Instead, let’s worry about the limited intelligence we display when granting opaque decision-making systems a lot of control over our lives. Will a pause on training ever larger models help us? Not likely, because it misses the point. Systems that have already been trained can cause a lot of trouble as soon as we irresponsibly grant them too much control.