World AI Cannes Festival 2023

Last week, an unexpectedly chilly Cannes hosted around 15k visitors at its annual World AI Cannes Festival. Three days packed with talks and exhibitions gave an overview of current trends in AI and showcased many exciting technologies. The timing was especially fortunate, as the generative AI hype has peaked over the last couple of weeks. With AI on the rise and crypto and the metaverse in decline, it will be exciting to see what’s around the corner. WAICF 2023 gave us a glimpse of that. Below is a summary of my notes from the event.

JoAnn Stonier (Mastercard)

The more interpretable AI models are, the easier it is for someone to comprehend and trust the model.

Explaining how and why a generative model produces a given output is currently the biggest challenge in AI.

1/3 of conversations at the Davos World Economic Forum started with “Hey, do you know that ChatGPT generated X”.

Generative AI benefits:

- hyperpersonalization

- complex problem solving

- customized service (achieved in the coming 6 months)

The query “Why is paleo diet the best” assumes that paleo is the best, which might not be the case (confirmation bias). There are also IP concerns with the derivations from the original - how much derivation is required to make the result original.

People who use a lot of generative AI could develop mental atrophy, because they are relying on machines to do the analytical thinking for them.

Nozha Boujemaa (IKEA)

Trustworthy AI requires company-wide awareness of the issues related to using AI.

Proof of trust enables responsible and beneficial use of data and algorithms for people, business and planet.

Michaela Schutt (Siemens)

Evaluated AI solutions for recruitment 5 years ago with 5 major AI companies. Used a dump of job profiles, CVs and outcomes. Devastating results. AI solved the recruiting problem by choosing “interest in soccer” as the most valuable predictor.

Accordingly, bias is a huge problem in applying AI to HR. You need transparency on the decision-making logic that is used by the model to arrive at the outcome. Also, humans have a lot of bias. Implemented dedicated bias testing for humans and for machines.

- AI will not replace decision-making in HR.

- Machines will do more and more of the work, so we need to think about where the human adds the most value.

Yann LeCun (Meta)

LLMs are very helpful as writing assistants (like autocomplete on steroids). But not so good for giving advice and answering questions. Some estimates say that they get only 60% of responses right. After the excitement of the first weeks people will realise where these systems can be effectively used and where not.

AI is already accelerating research in physics, biology and chemistry.

Transformers have transformed content moderation, hate speech detection and translation on social networks. Before transformers, 30% of hate speech was detected automatically; after transformers, 95%.

Biggest limitations of current models:

- physical and geometric reasoning

- inability to plan their actions

- humans and animals have common sense which the current ML models lack (world model)

Outlook for AI in the future:

Hierarchical planning - decompose the task into simpler tasks and decompose those all the way down to the millisecond domain of planning muscle control.
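The decomposition idea can be illustrated with a toy sketch. This is not LeCun's proposed architecture (where the decomposition would be learned); it is only a hand-written lookup table, and the task names in `SUBTASKS` are made up for illustration.

```python
# Hypothetical task tree: each compound task maps to an ordered list of subtasks.
# Anything that is not a key in this table is treated as a primitive action.
SUBTASKS = {
    "make coffee": ["boil water", "grind beans", "brew"],
    "boil water": ["fill kettle", "switch kettle on"],
    "brew": ["add grounds to filter", "pour water"],
}


def plan(task: str) -> list[str]:
    """Recursively expand a task into a flat sequence of primitive actions."""
    if task not in SUBTASKS:
        return [task]  # primitive: cannot be decomposed further
    steps: list[str] = []
    for subtask in SUBTASKS[task]:
        steps.extend(plan(subtask))
    return steps


print(plan("make coffee"))
```

In a real system the recursion would continue far below this level, down to motor commands, and the hard part is learning the table rather than walking it.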

We will definitely have human-level AI, but no idea when. New technology will transform and disrupt society, but our children will be able to live with it, while we might perceive it as strange or evil.

Generative models will release a lot of creative juices. It will displace a lot of professions. Soon we will have systems that can generate music (at an aesthetic level comparable to Dall-E).

Aidan Gomez (cohere.ai)

Co-author of the original transformer paper and co-founder of https://cohere.ai

- the abilities that ChatGPT exhibits today appeared to be decades away 5 years ago

- knowledge work (low error tolerance) and creative applications (high error tolerance) will be automated to a substantial extent

- LLMs should cite their sources in order to make them more trustworthy (this work is underway e.g. @ DeepMind)

- Product problem - how to put these powerful technologies to the customer in a way that is safe and enables you to catch the bugs. At the moment, nobody has a clue how to solve this.

- LLMs should get more agency: they should be able to use the tools that humans have built in order to do the research we have asked them to do

- Universal Transformers - underrated paper that will have a lot of impact in the coming years

- Whatever architecture makes training 10% cheaper than it is with transformers will save millions and kill the transformer

Thomas Wolf (Hugging Face)

Responsible AI: fair/not biased, trustable (based on some explicit criteria), harmless

Hugging Face expects to have an open-source model comparable to GPT-3 available within 1 year.

There are no guarantees about the output of a generative model. You need an extra governance layer on top to make sure it will not generate unwanted content.

- In 3 years, compute will be much cheaper, so GPT-3 could be trained on one workstation.

- At the moment, it seems that the main bottleneck for AI progress is not the models but the availability of data.

- The most impactful result of AGI would be to accelerate research and discovery.

Michael Kearns (University of Pennsylvania, Amazon scholar)

- optimizing for accuracy does not ensure equal accuracy across subgroups
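A minimal sketch of why this matters, using made-up toy data (the groups `A` and `B` and all labels are hypothetical): overall accuracy looks good while one subgroup is served far worse.

```python
from collections import defaultdict

# Toy, made-up data: (group, true_label, predicted_label).
# Group A dominates the sample, so it also dominates the overall accuracy.
records = [("A", 1, 1)] * 4 + [("A", 0, 0)] * 4 + [("B", 1, 0), ("B", 0, 0)]


def accuracy_by_group(rows):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in rows:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


overall = sum(y == p for _, y, p in records) / len(records)
per_group = accuracy_by_group(records)

print(overall)    # 0.9
print(per_group)  # {'A': 1.0, 'B': 0.5}
```

A model tuned only on the overall number has no incentive to fix group B, because B contributes so few examples.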

Privacy is an issue: it should not be possible to extract training data from a model.

Current deep-ML does not provide any rigorous guarantees of privacy.

Privacy guarantees - add noise to each particular data record without skewing the sample parameters.

Private synthetic data for training - preserves the statistical properties in the dataset without revealing attributes of individuals
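The noise-adding idea can be sketched roughly as follows. This is in the spirit of differential privacy, which my notes do not name explicitly, so treat that link as my assumption. The noise scale here is arbitrary and not calibrated to any formal privacy budget, and the `ages` data is synthetic.

```python
import random
import statistics


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Zero-mean Laplace noise, sampled as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def privatize(values, scale, rng):
    """Perturb every record independently with zero-mean noise."""
    return [v + laplace_noise(scale, rng) for v in values]


rng = random.Random(0)
ages = [rng.uniform(18, 90) for _ in range(10_000)]  # synthetic records
noisy_ages = privatize(ages, scale=5.0, rng=rng)

true_mean = statistics.fmean(ages)
noisy_mean = statistics.fmean(noisy_ages)
print(f"true mean {true_mean:.2f}, noisy mean {noisy_mean:.2f}")
```

Because the noise has zero mean, it largely cancels out in the aggregate, so the sample mean stays close to the original while individual records no longer reveal the exact values.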

Stuart Russell (UC Berkeley)

Still far from AGI, but when we succeed there will be a 10x increase in world GDP and by acting responsibly we could lift the living standards of everyone on Earth to a respectable level.

One of the unsolved problems:

- How do we get long-range thinking at multiple levels of abstraction? (Above, Yann LeCun mentions hierarchical planning as a relevant solution.)

Better AI with incompletely or incorrectly defined objectives will lead to worse outcomes for humankind.

catastrophe == loss of control over AI’s actions

The key is not in the intelligence:

Machines are intelligent to the extent that their actions can be expected to achieve their objectives.

But in whether the AI's goals are aligned with ours:

Machines are beneficial to the extent that their actions can be expected to achieve our objectives.

It is in our best interests to build machines that:

- must act in the best interests of humans

- are explicitly uncertain about what those interests are and ask for permission

The solution can be formulated mathematically as an assistance game. Assistance game solvers exhibit deference, minimally invasive behavior, willingness to be switched off.
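A toy illustration of why uncertainty leads to deference, loosely modelled on the "off-switch" variant of the assistance game. The belief values are made up, and the sketch assumes the human is perfectly rational and knows their own utility, which is a strong simplification.

```python
# Robot's belief over the human's utility U for a proposed action,
# as (utility, probability) pairs. The numbers are invented for illustration.
belief = [(-2.0, 0.4), (1.0, 0.6)]


def value_act(belief):
    """Act without asking: the robot gets the expected utility, good or bad."""
    return sum(u * p for u, p in belief)


def value_switch_off(belief):
    """Switch itself off: nothing happens, utility is zero."""
    return 0.0


def value_defer(belief):
    """Ask first: the human approves only when U > 0, otherwise switches the robot off."""
    return sum(max(u, 0.0) * p for u, p in belief)


for name, fn in [("act", value_act), ("off", value_switch_off), ("defer", value_defer)]:
    print(f"{name}: {fn(belief):.2f}")
```

Deferring is never worse than the other two options, since E[max(U, 0)] ≥ max(E[U], 0). If the robot were certain about U, the advantage of asking would vanish, which is why the explicit uncertainty matters.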

first order logic + world is probabilistic = first order probabilistic languages

Gary Marcus (AI-realist)

Did not present at the conference, but posted the following on his Substack on the topic of integrating web search with LLMs:

Others, it should be noted, also encountered errors in relatively brief trials, eg CBS Mornings reported in their segment that in a fairly brief trial they encountered errors of geography, and hallucinations of plausible-sounding but fictitious establishments that didn’t exist.

…

“Kevin Scott, the chief technology officer of Microsoft, and Sam Altman, the chief executive of OpenAI, said in a joint interview on Tuesday that they expected these issues to be ironed out over time”.

…

Hallucinations are in their silicon blood, a byproduct of the way they compress their inputs, losing track of factual relations in the process.

…

I do actually think that these problems will eventually get ironed out, possibly after some important fresh discoveries are made, but whether they get ironed out soon is another matter entirely. Is the time course to rectifying hallucinations weeks? Months? Years? Decades? It matters.

If they don’t get ironed out soon —and they might not– people might quickly tire of chat based search, in which BS and truth are so hard to discriminate, and eventually find themselves returning to do their searches the old-fashioned, early 21st century way, awe or not.

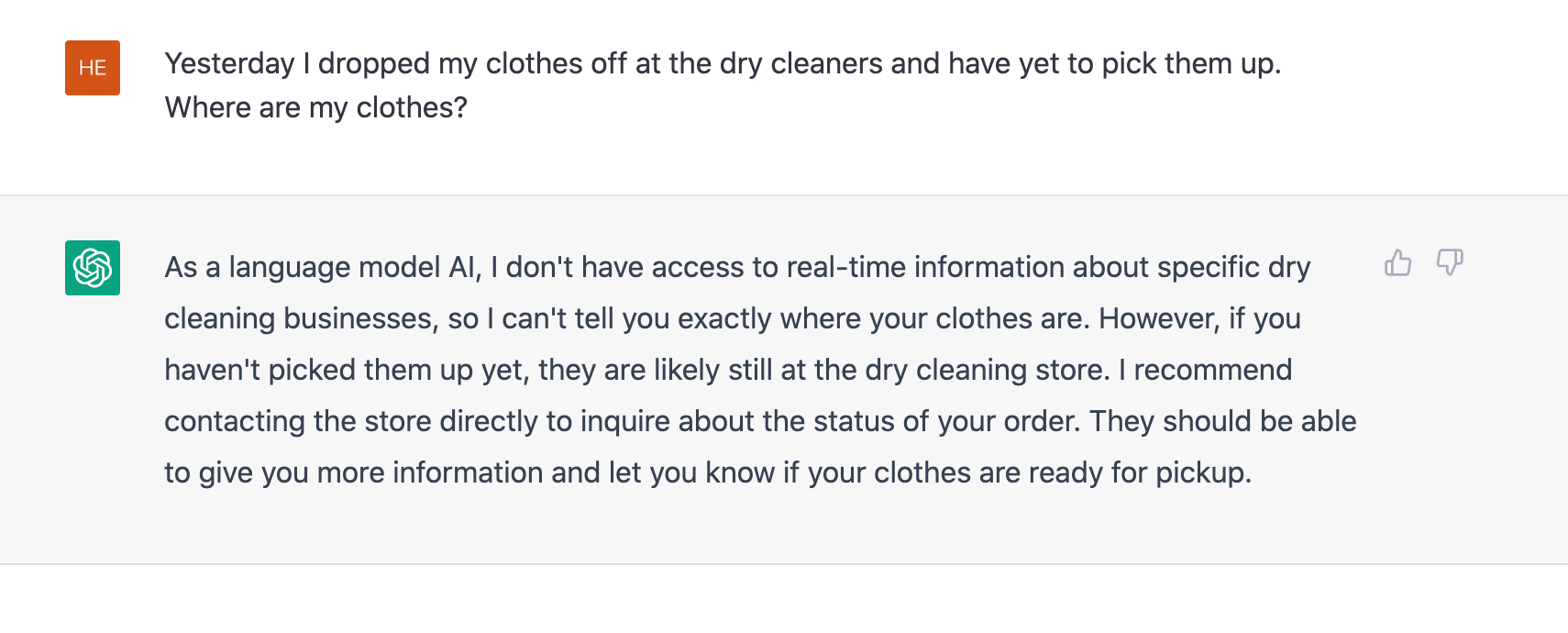

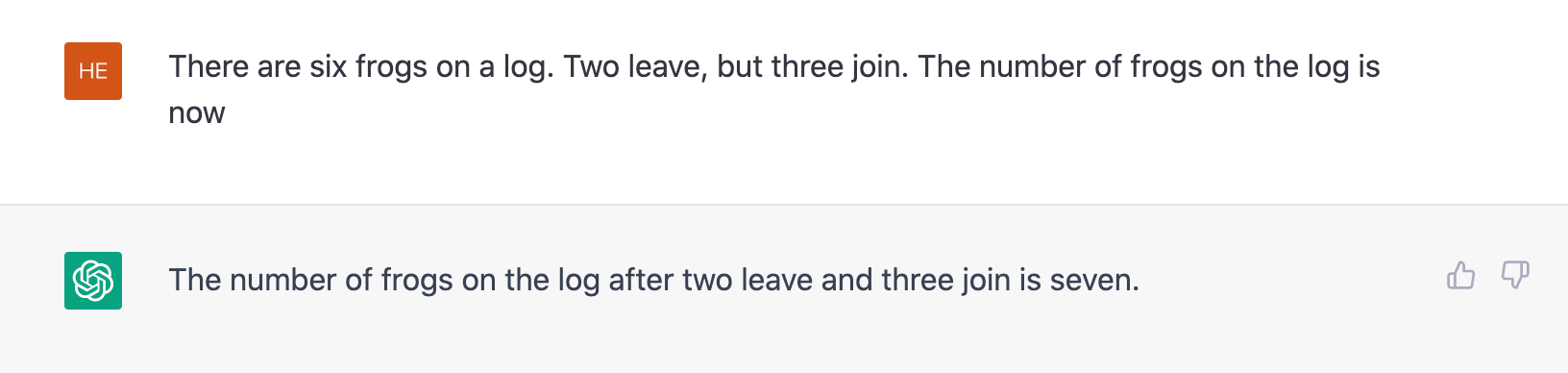

However, improvements of ChatGPT (GPT-3.5) over GPT-2 are clearly impressive even based on his own tests published in 2020:

Scaling neural network models—making them bigger—has made their faux writing more and more authoritative-sounding, but not more and more truthful.

The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence @ 2020

Results from ChatGPT (Feb 2023):

Miscellaneous notes

The essence of learning and intelligence seems to revolve mostly around generalization across entities

the world has things in it i.e. entities

You have to be able to generalize across objects (humans, pawns, days).

[the] capacity to be affected by objects, must necessarily precede all intuitions of these objects,.. and so exist[s] in the mind a priori. Immanuel Kant

Expressive power is the amount of generalization capability exhibited by the language.

Acute issues in AI (besides environmental impact, bias etc) are about control and guarantees

If you delegate decision-making control to AI then you want to have some guarantees i.e. you need a proof of trust:

- guarantees about the range of possible responses/decisions it will make

- guarantees about the accuracy of responses/decisions with respect to input

Why is everybody talking about ChatGPT?

The broad appeal of ChatGPT is mostly due to the fact that text is so pervasive in our life.

Are LLMs good because they are very flexible at tracking conditional probability distributions (i.e. correlations) or are they actually building world models based on text?

When an LLM was trained on sequences of moves from the board game Othello, the subsequent analysis and tests on the network produced credible evidence supporting the notion that large language models are developing world models and relying on them to generate sequences.
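For contrast, the purely statistical reading of the question above can be sketched as a bigram model, which does nothing but track the conditional distribution of the next token given the previous one. The tiny corpus is made up, and an LLM is of course vastly more flexible than this.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Estimate P(next | prev) from the counts (empty dict for unseen tokens)."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram(corpus)
probs = next_token_probs(model, "the")
print(probs)  # "cat" follows "the" twice, "mat" once
```

Such a model has no representation of cats or mats at all, only co-occurrence counts. The Othello result suggests that large models go beyond this and encode something like the state of the board.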